Outcome-Driven Engineering

ODE to joy

The production and delivery of software has become increasingly central to modern society. What was once the concern of tech companies in the late 20th century is now of far broader relevance, as almost every organisation today has some element of software development activity to support. Over this period, the way in which teams are organised to deliver software has changed significantly, and yet relatively few outside the industry are familiar with the dynamics of this shift. A major driver for change has been the broad cross-industry move away from sequential, or Waterfall, planning towards more iterative software development methods, most notably those of the Agile variety. The diagram below illustrates this transition from linear to circular.

The Agile software development movement was launched with the celebrated Agile Manifesto, published in 2001, which is succinct enough to be contained entirely in a single screenshot:

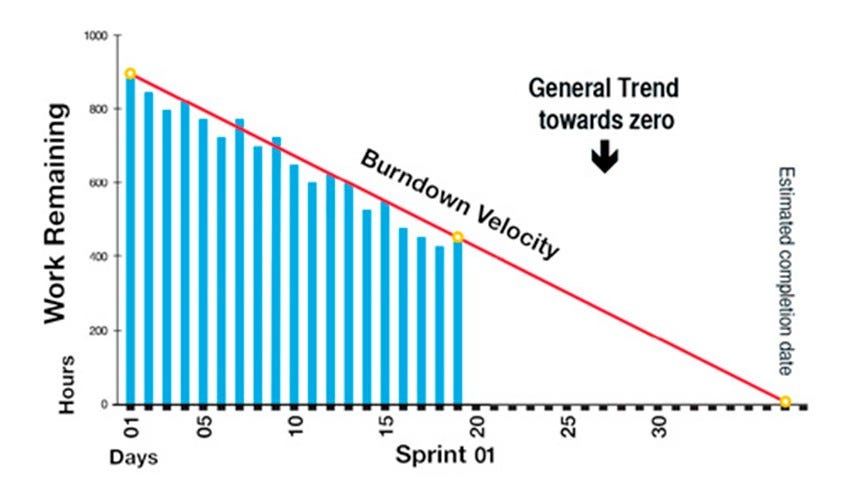

The benefits of Agile software development have been well documented, and the approach is now mainstream across businesses both small and large. The Agile Manifesto is not prescriptive on specifics, so a number of alternative formulations have emerged to guide practitioners. Scrum is perhaps the most widely used. The Scrum methodology is built around partitioning work into fixed-length sprints, typically two weeks long. These sprints are managed using a variety of ceremonies, notably a planning session before each sprint and a retrospective afterwards. Progress is gauged using metrics such as velocity and work remaining. The basic idea is that the team burns down the work remaining to complete a software delivery on a sprint-by-sprint basis, in a broadly predictable fashion, as illustrated below:
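To make the burn-down idea concrete, here is a minimal Python sketch, assuming work is estimated in story points and velocity is simply the average number of points completed per sprint. The function names `burn_down` and `forecast_sprints` and the sample figures are hypothetical, chosen for illustration rather than taken from any Scrum tool.

```python
import math


def burn_down(total_points: int, completed_per_sprint: list[int]) -> list[int]:
    """Return the work remaining at the start and after each sprint."""
    remaining = [total_points]
    for done in completed_per_sprint:
        # Work remaining can never drop below zero.
        remaining.append(max(remaining[-1] - done, 0))
    return remaining


def forecast_sprints(remaining: int, completed_per_sprint: list[int]) -> int:
    """Estimate sprints still needed by dividing remaining work by average velocity."""
    if not completed_per_sprint:
        raise ValueError("need at least one completed sprint to estimate velocity")
    velocity = sum(completed_per_sprint) / len(completed_per_sprint)
    if velocity <= 0:
        raise ValueError("velocity must be positive to forecast completion")
    return math.ceil(remaining / velocity)


history = [18, 22, 20]  # story points completed in each sprint (illustrative)
chart = burn_down(120, history)
print(chart)  # [120, 102, 80, 60]
print(forecast_sprints(chart[-1], history))  # 3
```

The forecast is only as good as the assumption that velocity stays broadly stable from sprint to sprint, which is exactly the predictability the burn-down chart relies on.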

As Agile methods like Scrum have become more normalised, a variety of issues relating to their implementation have emerged. Three key ones highlighted by Agile sceptics are:

Cargo Cult Agile: This arises when the ceremonial aspects of Agile receive more attention than the outcomes themselves. It is more likely to occur in environments that lack prior experience of the process. Examples include a stubborn insistence on daily stand-ups and the creation of niche roles and responsibilities in the organisation to support the process. Cargo cult Agile is often compounded by insufficient investment in staff training.

The term is now broadly used to describe behaviour where people mistakenly think they can summon some benefit by going through empty or unimportant motions. They don’t understand the real consequences or causes, but they try and get a result anyway. You can see this in science, in programming, and in agile software development. In fact, it is quite common indeed.

Over-fetishisation of metrics: A predictable consequence of Dataism becoming the “rising philosophy” of the 21st century has been the over-privileging of Agile metrics as a source of ground truth on delivery progress. Of particular concern is the belief that it is possible to measure the productivity of a software delivery team through their engineering activity alone. The temptation to do so has increased in recent years as a swathe of Engineering Intelligence tools, such as Code Climate Velocity and Haystack, have come to market that in theory allow managers to derive data-driven insights from the tools their teams use day to day. While there is value to be gained from looking at such data, you should be careful not to introduce neo-Taylorism by the back door through vanity metrics that look good but aren’t important.

FAMGA envy: The five Big Tech companies that make up FAMGA (Facebook, Apple, Microsoft, Google, Amazon) are increasingly driving the US stock market. Their power and wealth have grown hugely over the last decade, fuelling increased interest in studying and understanding their methods, tools and practices. Many organisations have sought to apply these in their own context, in a form of tech envy. This may be a category error, because the vast majority of organisations will never reach FAMGA scale. They will never have to worry about maintaining platforms that support millions of customers over multiple years with hundreds of engineers, or have dozens of people working on a voracious talent acquisition pipeline. While some elements of their approaches are worth learning from, there are limited returns in building your organisation as a FAMGA clone.

Outcome-driven engineering is an approach to software development and delivery that builds on the positives of the Agile revolution while attempting to avoid some of its pitfalls. In many ways it involves going back to the basic inspirations encapsulated in the original Agile Manifesto. At the heart of the approach is a shift in emphasis towards more holistic systems thinking. The focus is on the effectiveness of a technical team in terms of its collective impact, rather than on adherence to specific methods and metrics in isolation. Outcome-driven engineering elevates customer feedback to the primary measure for assessing the effectiveness of delivery iterations. While there is value in technical leadership keeping abreast of the standard Agile methods and metrics in use across their organisations, it is also vital that leaders have a clear understanding of whether and how their teams are delivering customer value. This will become increasingly important in the post-Covid new normal of disembodied remote working.

If you work in software development and delivery, what does outcome-driven engineering mean and how do you go about applying it? The first thing to accept is that there is no simple recipe for creating an outcome-oriented engineering mindset in your organisation. A lot will depend on your specific context. For instance, you may not have a direct channel to customers, in which case you will need to find a proxy for measuring customer impact. This could take the form of a product manager who represents the voice of the customer and is passionate about their success. You may also have only a small number of staff involved in engineering, in which case there will be limits to the degree of freedom you have in organising them. Practising outcome-driven engineering is ultimately an exercise in making trade-offs across the range of elements listed below. You should review each and make a judgement call on its relevance to your specific context:

Organisation Design: it is important to be deliberate about how you organise your engineering delivery resources. In particular, you need to be very clear on the roles and responsibilities around technical, people and product leadership. Pat Kua has some great material on this topic, including this excellent post on the tech lead role. The article explores the pros and cons of combining technical and people management versus keeping these roles separate. Either approach can work depending on context. At Amazon, the two responsibilities are almost always combined in a single Software Development Manager (SDM) role, as in this configuration taken from Kua’s article:

Programmatic teams: these should be the irreducible units of ownership and delivery around which your engineering organisation is built. They are durable entities of some 6-8 multi-disciplinary staff with a single technical leader, strong ownership boundaries and total responsibility for codebases that have long-term importance to the organisation. Clear domain separation is the most important part of engineering organisation design. If you partition your programmatic teams along the right domain boundaries, you should not find yourself reorganising them every six months. You also create the capacity to deliver new features concurrently with supporting existing ones, including on-call engineering to ensure customer escalations are addressed as rapidly as possible.

Autonomy: programmatic teams need to be given significant latitude to determine how they address customer problems, in terms of both self-organisation and technology strategy. This helps to build ownership from within. However, autonomy must coexist with standardisation, and getting that balance right is difficult and a frequent source of friction in scaling engineering organisations.

Standardisation: where it makes sense to do so, programmatic teams should standardise on key aspects of their development processes. This helps avoid waste, supports economies of scale and generates a faster return on investment. Engineering tools and practices have converged on a standard form over the last few years. Many organisations now use Git-based version control platforms like GitHub and branching models such as Git flow or trunk-based development. Many also use JIRA for issue tracking and tools like Jenkins or CircleCI to support test automation. It can be beneficial to take this approach further, though, and build custom developer experience (DX) tooling to assist with the generation of standard codebases. A good example is Spotify’s Backstage developer portal. There is a downside to standardisation, however: teams that fail to update their standards as new methods and tools become available can incur technical debt over time. Will Larson’s Magnitudes of Exploration article provides some guidelines for navigating the terrain:

I am unaware of any successful technology company that doesn't rely heavily on a relatively small set of standardized technologies. The combination of high leverage and low risk provided by standardized is fairly unique.

Standardization is so powerful that we should default to consolidating on few platforms, and invest heavily into their success. However, we should pursue explorations that offers at least one order of magnitude improvement over the existing technology.

Calibration: another example of standardisation, and a key enabler of transparency for staff on norms around individual performance, is an Engineering Growth Framework (EGF). Also known as a career ladder, an EGF outlines expected behaviours and competencies at different levels within the engineering organisation, thereby providing clarity to everyone on promotion criteria. An EGF also applies to technical managers and ensures they are well calibrated across the organisation. Progression.fyi offers a collection of public EGFs you can study for inspiration.

Alignment: coordination is essential when multiple programmatic teams are delivering software that needs to work in harmony. There are two key mechanisms that can be put in place to support cross-team collaboration. The first involves hiring and growing Technical Program Managers (TPMs), whose job is to ensure the various deliveries work together as expected. The role and its responsibilities are articulated well in this Facebook engineering post:

TPMs are responsible for seeing programs through from beginning to end, ensuring a better workflow and more effective communication. They are a diverse group with a wide range of backgrounds, but they share common characteristics: a love for execution and a knack for doing whatever is necessary to see a program to completion.

A good TPM can make the difference between success and failure on major projects. TPMs are very common in FAMGA outfits but much less prevalent in other companies. The second mechanism is to align teams on common goals. OKRs (Objectives and Key Results) are perhaps the most commonly used tool for this. They are set from the top down to provide alignment across different programmatic teams. There are many resources available on how to construct OKRs; Filipe Castro’s site is a good starting point. Done well, OKRs can provide clear direction on business goals and incentivise teams to cooperate for the common good. All too often, though, especially in smaller organisations, OKRs are not implemented or managed effectively and lapse into cargo cult territory. In these environments they can become counterproductive, and you may be better off replacing them with something as simple as an emailed list of priorities. This post by Marty Cagan outlines why empowering teams to address objectives is arguably more important than how you construct them. Scaling OKR activity to suit your context, and supporting cross-team projects through a TPM, will likely produce better outcomes.

There is so much widespread confusion out there on OKR’s, it’s a very difficult topic to discuss because everyone brings their own very different experiences and perspective to the conversation.

Metrics: productivity and velocity metrics alone are not a measure of customer value, so they cannot and should not be used as a proxy for impact. That said, certain metrics relating to cycle time, change failure rate and MTTR (mean time to restore service) are useful to track on a per-team basis. The importance of these metrics was promoted in Accelerate, a definitive book on the topic published in 2018. Team-level health metrics are also helpful to monitor; Spotify provides a good team health check template as a starting point. Measuring impact may require product and engineering leaders to go further and build higher-level synthetic metrics specific to their context. Will Larson provides a great example here in relation to computer security:

imagine creating a "server security grade", where you assign every server a letter grade from "A" to "F" based on its input metrics. The particulars are a bit orthogonal here, but perhaps a server with an "A" security grade would be less than seven days old, running the latest operating system, and have very limited root access. Each day you'd assess a security grade to every server, and then you would be able to start tracking the distribution of servers across letter grades.
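Larson notes that the particulars are orthogonal, so the following Python sketch simply assumes one possible rule set: a server starts at "A" and drops one letter for each input that falls short (seven or more days old, not on the latest operating system, more than two users with root access), with an extra drop for hosts 90 or more days old. The `Server` fields, the thresholds and the fleet data are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

GRADES = "ABCDF"  # best to worst


@dataclass
class Server:
    name: str
    age_days: int     # days since the server was provisioned
    latest_os: bool   # running the latest operating system release?
    root_users: int   # number of accounts with root access


def security_grade(server: Server) -> str:
    """Assumed rule: start at A and drop one letter per shortfall, bottoming out at F."""
    shortfalls = 0
    if server.age_days >= 7:
        shortfalls += 1
    if server.age_days >= 90:  # very old hosts lose an extra grade (assumption)
        shortfalls += 1
    if not server.latest_os:
        shortfalls += 1
    if server.root_users > 2:  # threshold for "very limited root access" (assumption)
        shortfalls += 1
    return GRADES[min(shortfalls, len(GRADES) - 1)]


def grade_distribution(servers: list[Server]) -> dict[str, int]:
    """Count how many servers fall into each letter grade."""
    counts = Counter(security_grade(s) for s in servers)
    return {grade: counts[grade] for grade in GRADES}


fleet = [
    Server("web-1", age_days=3, latest_os=True, root_users=1),
    Server("web-2", age_days=30, latest_os=True, root_users=1),
    Server("batch-1", age_days=10, latest_os=False, root_users=1),
    Server("db-1", age_days=120, latest_os=False, root_users=5),
]
print(grade_distribution(fleet))  # {'A': 1, 'B': 1, 'C': 1, 'D': 0, 'F': 1}
```

Tracking how this distribution shifts day to day gives a single synthetic signal about fleet security, rather than a dashboard of separate input metrics.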

Larson also cautions against excessive focus on measurement, suggesting it can lead to a form of metrics fatigue. Better outcomes may be achieved by creating a single inspirational “Saint-Exupéry” metric, which may well be synthetic, that “tells the whole story of why we're doing this”:

Is it the case that every project is genuinely motivated by a single metric? Well, no, of course not. Almost every great project is motivated by a number of different concerns … but I think it's usually possible to narrow down to one compelling metric that goes a surprisingly long way towards capturing the project's spirit.
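Returning to the delivery metrics from Accelerate mentioned above, the sketch below shows how cycle time, change failure rate and MTTR might be computed per team from a log of deployments. It assumes a hypothetical `Deployment` record with commit, deploy and restore timestamps, and approximates cycle time as commit-to-production time (what Accelerate calls lead time for changes); real engineering intelligence tools may define these differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # change reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # service restored, if it failed


def hours(delta: timedelta) -> float:
    """Convert a timedelta to a float number of hours."""
    return delta / timedelta(hours=1)


def cycle_time_hours(deploys: list[Deployment]) -> float:
    """Mean commit-to-production time, in hours."""
    return mean(hours(d.deployed_at - d.committed_at) for d in deploys)


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Fraction of deployments that caused a failure in production."""
    return sum(d.failed for d in deploys) / len(deploys)


def mttr_hours(deploys: list[Deployment]) -> float:
    """Mean time to restore service after a failed deployment, in hours."""
    outages = [hours(d.restored_at - d.deployed_at)
               for d in deploys if d.failed and d.restored_at is not None]
    return mean(outages) if outages else 0.0


t0 = datetime(2024, 1, 1, 9, 0)
log = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=8), failed=True, restored_at=t0 + timedelta(hours=9)),
    Deployment(t0, t0 + timedelta(hours=6)),
    Deployment(t0, t0 + timedelta(hours=2), failed=True, restored_at=t0 + timedelta(hours=5)),
]
print(cycle_time_hours(log), change_failure_rate(log), mttr_hours(log))  # 5.0 0.5 2.0
```

Tracked per team over time, trends in these numbers are more informative than any single value, and none of them measures customer value on its own.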

Feedback: a greater focus on customer feedback and customer relationship management may be necessary to ensure you are heading in the right direction in terms of outcomes. This could involve creating a dedicated Customer Success function in your organisation and establishing clear escalation mechanisms and contact points for customers to notify you when things go wrong. It may also involve running net promoter score (NPS) surveys with customers. Introducing a Customer Connect program that directly exposes engineers to the customer experience is invaluable for developing a customer-centric mindset.

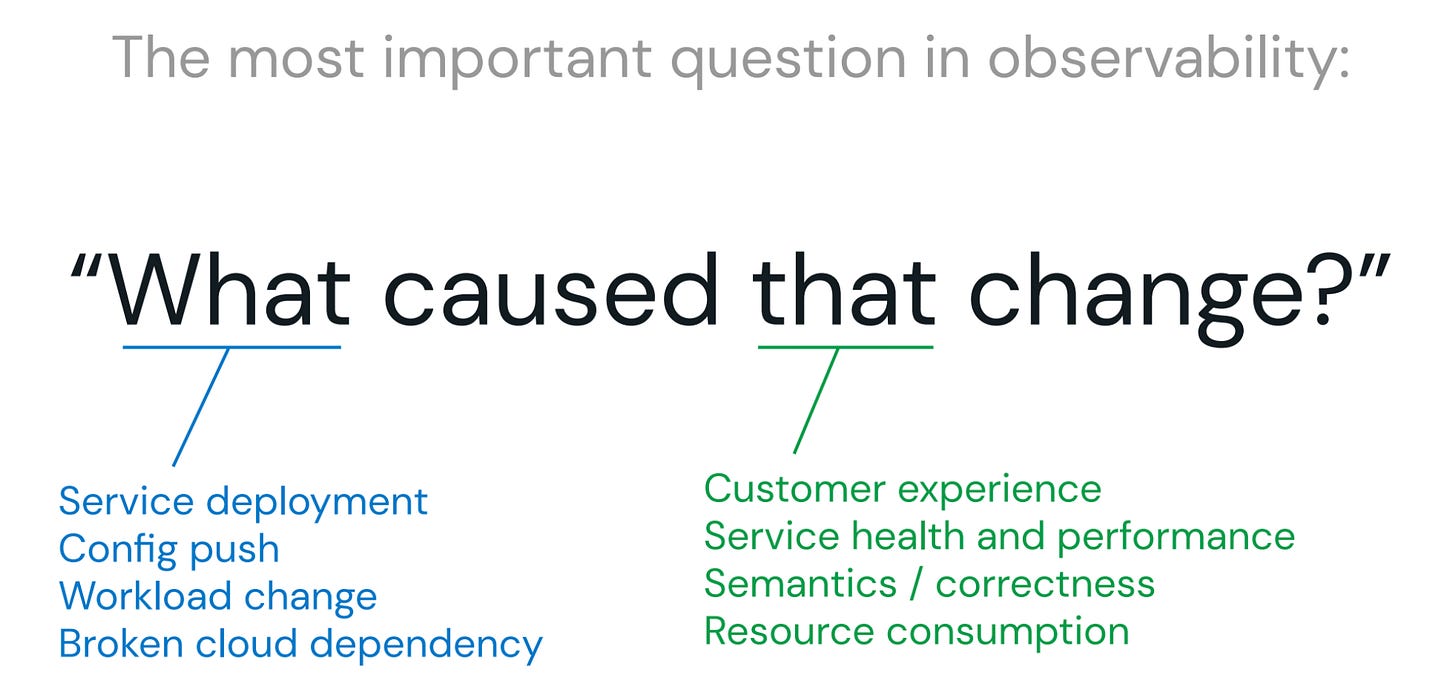
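Net promoter score itself is simple arithmetic: respondents rate how likely they are to recommend you on a 0-10 scale, and NPS is the percentage of promoters (9-10) minus the percentage of detractors (0-6). A minimal Python sketch, with a hypothetical function name and made-up survey responses:

```python
def net_promoter_score(scores: list[int]) -> float:
    """Percentage of promoters (9-10) minus percentage of detractors (0-6).

    Passives (7-8) count towards the total number of responses only.
    """
    if not scores:
        raise ValueError("no survey responses")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be on the 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


responses = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]  # illustrative survey data
print(net_promoter_score(responses))  # 20.0
```

The score ranges from -100 to +100. Tracked per delivery iteration, it offers one coarse signal of whether customers are receiving value, best read alongside qualitative feedback.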

Monitoring and Observability: it’s important to be able to assess whether your team is on track with delivery. There are two aspects to this, depending on the orientation of focus. Monitoring is more about the internal aspect: making sure teams are clear about goals and delivery objectives, both short and long term. You can view it as a form of health check. Observability is more about the external aspect: determining that the customer is receiving value with each iteration. This relates to the previous point about soliciting their feedback. There is an analogy here with a car journey in which the Objective is for the customer to travel from A to B, and reaching regularly spaced milestone markers along the way represents the Key Results. Monitoring is measuring whether the car is performing as expected during the journey. Observability is checking that we are on track against the milestones. The concept can be extended to the role of team leadership:

instead of measuring productivity, we should instead invest in finding, hiring, and growing managers who can observe productivity as part of their daily work with developers

Monitoring and observability are also crucial once software has been delivered and is running in production. They work in tandem to allow teams to detect and then respond to customer incidents, as explained in greater detail in Titus Winters’ book, Software Engineering at Google. Winters is responsible for this graphic:

The list above is meant as a starting point for understanding where the focus of attention lies in outcome-driven engineering. Ultimately, however, it isn't simply a matter of implementing a checklist of mechanisms. Building an outcome-driven engineering organisation requires buying into and cultivating the corresponding mindset. The key aspects of that mindset are:

Agility: a focus on outcomes is inherently agile. It forces a greater emphasis on test-and-learn methods over detailed upfront specification. Teams must be encouraged to conduct supporting experiments, not all of which will yield positive results. Leaders must also be egoless and prepared to change their minds based on new evidence.

Curiosity: related to the previous point, the freedom to fail and try again in pursuit of outcomes is crucial, and that requires a blame-free, learning-oriented culture.

Trust: trust is foundational in outcome-driven engineering. An essential aspect of autonomy is that you have to trust the team to interpret and deliver on its goals. These will usually need to be aligned across multiple teams, typically by being captured in collectively owned OKRs.

EQ: IQ alone is necessary but not sufficient. Leaders also need to possess emotional intelligence, or EQ: the ability to understand, empathise and connect with their teams.

Outcome-driven engineering ultimately connects engineering effort directly to business value. It does so by focussing on organisation design, team alignment, context-appropriate measurement of value and direct, unvarnished feedback from the customer. The emphasis on customer obsession essentially represents a form of radical candour for business.

While the approach feels like a reasonable collection of sound practices, it’s important to sound a note of caution. There are potential downsides to adopting an outcome-driven engineering approach. It may not make sense if your engineering function is smaller than a single 6-8 person programmatic team. Investment is also often required to integrate the corresponding mechanistic enablers. For example, tech teams may find they need to add a feature flagging tool or introduce A/B testing to efficiently test different customer treatments. Furthermore, not all businesses have direct access to their end customers. In particular, product managers in B2B organisations will need to work hard to develop a proxy customer sensibility. Arguably the biggest impediment, however, is cultivating the mindset shift needed to adopt a new way of working. This requires buy-in from the very top of the organisation. The best way to secure it is to focus on the business case and ensure all stakeholders understand that outcome-driven engineering is ultimately all about optimising for business value.
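Feature flagging and A/B testing tools commonly assign users to treatments deterministically, so the same user always sees the same variant. The sketch below shows one such approach, hashing the user ID together with an experiment name into 100 buckets. It is a hypothetical illustration in Python, not the API of any particular flagging product, and `bucket`, `assign_variant` and their parameters are invented names.

```python
import hashlib


def bucket(user_id: str, experiment: str, buckets: int = 100) -> int:
    """Deterministically map a user to a bucket in [0, buckets) for one experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign_variant(user_id: str, experiment: str, treatment_percent: int) -> str:
    """Put roughly treatment_percent% of users in 'treatment', the rest in 'control'."""
    if not 0 <= treatment_percent <= 100:
        raise ValueError("treatment_percent must be between 0 and 100")
    return "treatment" if bucket(user_id, experiment) < treatment_percent else "control"


# The same user always lands in the same variant for a given experiment.
print(assign_variant("user-42", "new-checkout", 50))
```

Including the experiment name in the hash avoids the same users always landing in the treatment group across different experiments, and raising `treatment_percent` gradually turns the same mechanism into a percentage rollout behind a feature flag.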