The last five years have seen technology mount an assault on the high citadel of human creativity. Disciplines at the heart of the liberal arts are no longer the sole domain of human expertise. A range of methods and tools has been developed that can generate a bewildering variety of creative outputs using models built by trawling vast corpora of existing work. These approaches build upon the latest advances in deep learning. Taken collectively, they signpost a future where human expertise is demoted or potentially even replaced across a swathe of creative industries: a progressive hollowing out. Let’s survey the state of the art across a few of these citadels of human endeavour before considering what it means for Homo sapiens.

Music

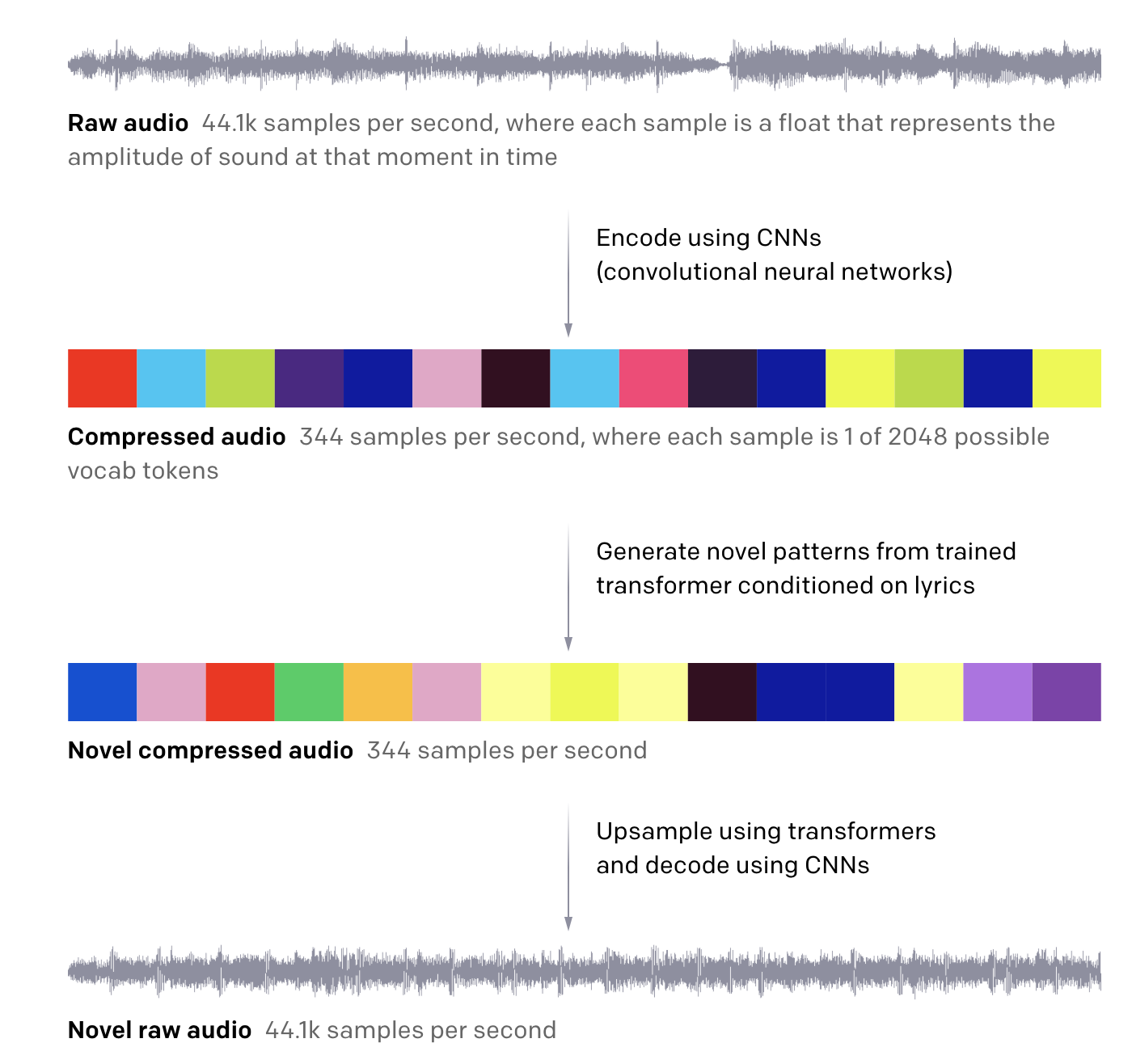

Musical composition and songwriting have long been synonymous with the height of human artistic achievement. From Beethoven and Bach to the Beatles, great compositions have been lauded by successive generations, and outstanding individuals like Mozart remain bywords for human genius. Today, the mystery of musical greatness is being dissected and recombined by tools leveraging the very latest developments in Artificial Intelligence (AI). These tools are capable of fragmenting symphonies and songs into their constituent atomic units. Jukebox, developed by OpenAI, is perhaps the most impressive example. It bills itself as “a neural net that generates music, including rudimentary singing, as raw audio in a variety of genres and artist styles.” Under the hood, Jukebox first compresses raw audio into sequences of discrete codes using a vector-quantised autoencoder (VQ-VAE). Transformers, an architecture designed to process sequential data, are then trained on large numbers of these code sequences to model different musical styles. New code sequences sampled from the transformers are finally decoded back into raw audio, producing original output representative of the style of the input. The exercise is akin to a form of compression followed by decompression.
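The quantisation step at the heart of this compression can be sketched in a few lines. This is a minimal illustration, not Jukebox’s code: it assumes a small random codebook and hand-made feature vectors standing in for encoded audio, whereas Jukebox learns its codebooks, encoder and decoder from data. Each vector is replaced by the index of its nearest codebook entry, and decoding simply looks those entries back up, giving a lossy reconstruction.

```python
import numpy as np

def vq_encode(frames, codebook):
    """Map each feature vector in `frames` to the index of its nearest codebook entry."""
    # Squared Euclidean distance between every frame and every code vector.
    dists = ((frames[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def vq_decode(codes, codebook):
    """Replace each discrete code with its codebook vector (lossy reconstruction)."""
    return codebook[codes]

rng = np.random.default_rng(0)
codebook = rng.normal(size=(8, 4))  # assumption: 8 codes, 4-dim features, random not learned
# Hypothetical "audio features": noisy copies of codebook entries 3, 3, 5 and 1.
frames = codebook[[3, 3, 5, 1]] + 0.01 * rng.normal(size=(4, 4))

codes = vq_encode(frames, codebook)  # a short sequence of integers
recon = vq_decode(codes, codebook)   # approximately equal to `frames`
```

In Jukebox it is sequences of integers like `codes`, rather than raw waveforms, that the transformers learn to model and generate.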

The transformer architecture is conceptually straightforward to grasp, even if complex in its detailed operation. Although the technique arrived only a few years ago, in 2017, the transformer lies at the heart of a number of recent, spectacular state-of-the-art advances in AI. In the case of Jukebox, the model is capable of generating realistic output based on encoding a wide range of input styles, as explored in another recent post.

The recording process itself is being modified through other technologies. Auto-Tune is one important example, which has had a profound impact on popular music over the last twenty years. As outlined in this post, the tool was created by an oil industry expert in signal processing and has high-level conceptual similarities to Jukebox. Sound, in this case the human voice, is broken down into its component frequencies using Fourier transforms. These frequencies are modified in the digital domain to perform the tuning and then converted back to analogue for the finished result, with its distinctive artifice. This process is described in a far more entertaining way here.
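The detect-and-correct idea can be sketched with NumPy’s FFT. This is a simplified illustration under stated assumptions, not Auto-Tune’s actual algorithm: it assumes a clean, single-pitch test tone sampled at a hypothetical 8 kHz, finds the strongest frequency component, snaps it to the nearest note of 12-tone equal temperament, and computes the ratio by which the voice would need to be shifted. The resynthesis step that actually shifts the pitch is omitted.

```python
import numpy as np

SAMPLE_RATE = 8000  # Hz; an assumed sample rate for this sketch

def dominant_frequency(signal, sample_rate=SAMPLE_RATE):
    """Estimate the strongest frequency component from the FFT magnitude peak."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    return freqs[spectrum.argmax()]

def nearest_semitone(freq, a4=440.0):
    """Snap a frequency to the nearest note in 12-tone equal temperament."""
    n = int(round(12 * np.log2(freq / a4)))  # semitones away from A4
    return a4 * 2 ** (n / 12)

t = np.arange(SAMPLE_RATE) / SAMPLE_RATE  # one second of sample times
sung = np.sin(2 * np.pi * 450.0 * t)      # a hypothetical, slightly sharp A4

detected = dominant_frequency(sung)       # about 450 Hz
target = nearest_semitone(detected)       # 440 Hz, the nearest "in tune" note
ratio = target / detected                 # pitch-shift factor a corrector would apply
```

Real vocals are far messier than a pure sine wave, so practical pitch correctors need more robust pitch tracking than a single FFT peak.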

Less obviously, content platforms like YouTube and Spotify are changing the way we experience music, in particular the discovery process. The easiest way to experience this for yourself is to let the algorithm auto-play once one of your own selections has finished. On doing so you’ll often encounter artists and tracks you haven’t come across before, and quite often you’ll find them spookily appealing. The approach used by Spotify is much more sophisticated than simple collaborative filtering, in which you are fed recommendations based on how closely your taste matches that of similar users. In the case of Spotify’s Discover Weekly feature, such collaborative filtering is just one part of the picture. Information is also extracted from the audio itself, and natural language processing (NLP) is separately applied to the text metadata held for tracks. The various inputs are combined to provide a personalised guide through a vast mausoleum of extant musical heritage, now instantly accessible via streaming on a device on your person. Spotify is able to mine and eternally recycle this back catalogue of existing music, making it increasingly difficult for human curators to compete.
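Simple user-based collaborative filtering, the baseline that Spotify goes beyond, can be sketched with cosine similarity. The listening matrix below is hypothetical toy data, and this is in no way Spotify’s implementation: each unheard track is scored by how often other users played it, weighted by how similar their listening is to yours.

```python
import numpy as np

# Hypothetical listening matrix: rows are users, columns are tracks,
# 1 means the user has played the track, 0 means they have not.
plays = np.array([
    [1, 1, 0, 0],  # user 0
    [1, 1, 1, 0],  # user 1: shares user 0's taste and also plays track 2
    [0, 0, 1, 1],  # user 2: nothing in common with user 0
])

def recommend(plays, user, k=1):
    """Return the indices of the top-k unheard tracks for `user`."""
    norms = np.linalg.norm(plays, axis=1)
    sims = plays @ plays[user] / (norms * norms[user])  # cosine similarity to every user
    sims[user] = 0.0                                    # ignore the user themselves
    scores = sims @ plays                               # similarity-weighted play counts
    scores[plays[user] > 0] = -np.inf                   # skip tracks already played
    return np.argsort(scores)[::-1][:k]

print(recommend(plays, 0))  # [2]
```

Track 2 is recommended to user 0 because the most similar listener, user 1, plays it. A user with no plays at all would need special handling, since their cosine similarity is undefined.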

Fashion

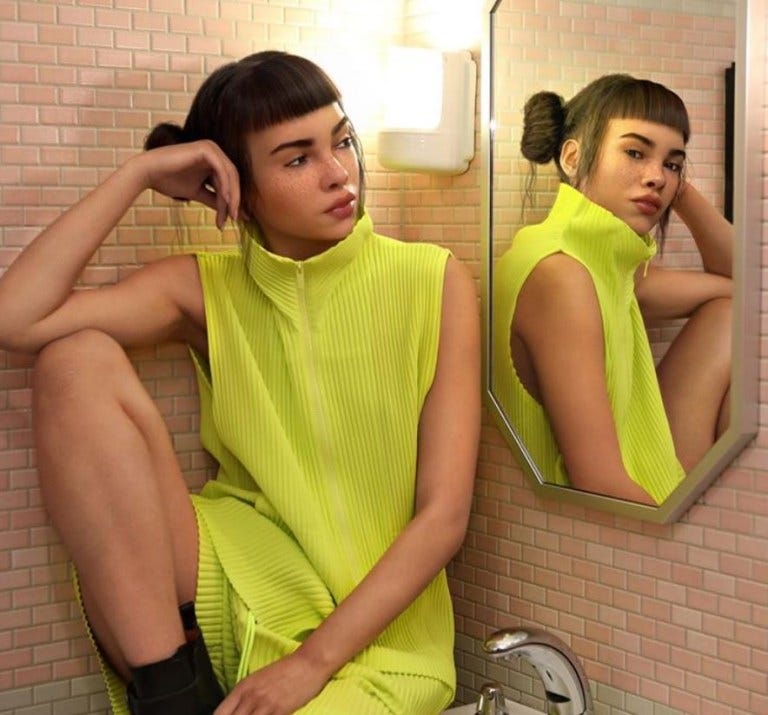

Fashion design has always been considered the realm of highly talented humans touched with creative genius. From Givenchy and Dior to Alexander McQueen, the path has typically involved years of study and experience before entry to the cloistered realm of haute couture. AI designers are now threatening to disrupt that tradition. In this case the technology driving the revolution is the Generative Adversarial Network (GAN). A GAN is a machine learning framework containing two neural networks. A generator network creates candidate designs and a discriminator network scores them against real examples. The scores feed back into the generator, which adjusts its weights in an effort to fool the discriminator. Once a model is built, a method called transfer learning can speed up training on related tasks by retraining only the outermost layer. The approach is widely applicable to other classes of problems, perhaps most controversially photorealistic deep fake images of people who don’t exist. Not only the clothes but also the model wearing them can be generated, as in the case of Lil Miquela. Nothing here is real except the money:
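The adversarial loop can be illustrated with a deliberately tiny, one-dimensional toy rather than a fashion model. Assumptions: the “real designs” are just numbers drawn from a normal distribution centred on 2, the generator can only learn an offset added to random noise, and the discriminator is a single logistic unit, with gradients derived by hand so that only NumPy is needed. Real GANs use deep networks on both sides, but the alternating structure is the same: the discriminator learns to tell real from fake, then the generator updates to fool it.

```python
import numpy as np

def sigmoid(u):
    return 1.0 / (1.0 + np.exp(-u))

def train_toy_gan(real_mean=2.0, steps=3000, batch=256, lr=0.1, seed=0):
    """Hypothetical toy GAN: returns the generator's learned offset."""
    rng = np.random.default_rng(seed)
    b = 0.0          # generator: fake = b + noise, so b is its only parameter
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        real = rng.normal(real_mean, 1.0, batch)
        fake = b + rng.normal(0.0, 1.0, batch)
        d_real, d_fake = sigmoid(w * real + c), sigmoid(w * fake + c)
        grad_w = np.mean(-(1 - d_real) * real + d_fake * fake)
        grad_c = np.mean(-(1 - d_real) + d_fake)
        w, c = w - lr * grad_w, c - lr * grad_c
        # Generator step: minimise -log D(fake), i.e. try to fool the updated discriminator.
        fake = b + rng.normal(0.0, 1.0, batch)
        d_fake = sigmoid(w * fake + c)
        grad_b = np.mean(-(1 - d_fake) * w)
        b -= lr * grad_b
    return b

offset = train_toy_gan()
```

After training, `offset` should sit close to 2, the centre of the real data, at which point this simple discriminator can no longer separate real from fake by position.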

a teenage fashionista and liberal issues advocate. In the three years, she’s been online, she managed to attract the attention of fashion magazines and luxury brands, as well as 1.6 million Instagram followers. … Lil Miquela enjoys an engagement rate of about 2.7%, on par with that of Selena Gomez and Beyonce. Her [Instagram] posts get an average of 47k likes.

Despite reservations, prospects seem bright for AI fashion designers, which look set first to support their human counterparts as assistants and then eventually to replace them. AI-designed output seems certain to overwhelm that of human designers within a few years, at least in quantity.

Being replaced by a GAN is just one element of the seismic change in store for the fashion industry. Technology is disrupting the entire value chain, from design through manufacture, inventory and distribution. The greatest impact could come via sewbots, which offer the promise of “a fully automated sewing workline built to scale sewn goods manufacturing”. Once perfected, sewbots represent an existential risk to millions of manual jobs in the developing world. One could argue they offer a way of resolving the widespread problem of poor working conditions, which has created significant negative publicity for Western fashion retail brands including H&M and Gap. The use of sewbots is also directionally aligned with the move in the West to reverse outsourcing. In this case, however, the only jobs created will be those needed to build and maintain the automated machinery. It remains unclear what all the displaced textile workers will do.

Art

GANs can be employed to generate art in broadly the same way they generate fashion items. They are already being used to create photorealistic new work, as in the case of the GauGAN tool created by NVIDIA Research.

Neural style transfer is another method for generating new art, in which a pre-existing style is applied to particular content. The general method is described in a guide here, which includes a walkthrough of a TensorFlow recipe for producing such output.
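In the classic formulation of neural style transfer, “style” is captured by Gram matrices: correlations between the feature channels of a convolutional network layer, computed with the spatial arrangement thrown away. The sketch below shows just that loss term in NumPy, with random arrays standing in (as an assumption) for the activations a pretrained network such as VGG would produce. The content loss and the optimisation loop that repaints the image are omitted.

```python
import numpy as np

def gram_matrix(features):
    """features: (channels, height, width) activations from one CNN layer."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # Channel-by-channel correlations, averaged over positions; layout is discarded.
    return flat @ flat.T / (h * w)

def style_loss(generated, style):
    """Mean squared difference between the two images' Gram matrices."""
    return np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2)

rng = np.random.default_rng(0)
style_feats = rng.normal(size=(3, 8, 8))  # stand-in for real CNN activations
# Shuffle pixel positions: the layout changes but the channel statistics do not.
shuffled = style_feats.reshape(3, -1)[:, rng.permutation(64)].reshape(3, 8, 8)

print(style_loss(shuffled, style_feats))  # effectively zero
```

Because shuffling positions leaves the Gram matrix unchanged, this loss responds to texture and colour statistics rather than to where things sit in the picture, which is what lets a style be transplanted onto new content.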

These are just two of the tools available for creating art using AI. There are many others to choose from, some of which are outlined on aiartists.org.

GANs are also employed less innocently in the creation of deep fakes. These are synthetic images generated from real-world input data. The output is uncanny in that it seems very real to us yet is entirely constructed. Deep fakes have the potential to wreak havoc with our already shaky perception of what is real. AI researchers working for Samsung in Moscow used GANs to generate a “living portrait” of the Mona Lisa that vividly illustrates the potential. It’s just one of a range of art GAN propositions in operation today. The reason this animated Mona Lisa seems so incongruously modern to us relates to the input data source:

The software was fed with a huge dataset of celebrity talking-head videos from YouTube and pinpointing significant landmarks on human faces, for which GANs were used.

As if this weren’t enough to worry artists, enter Ai-da:

the world’s first ultra-realistic robot artist that can draw and paint using pen and pencil and creates sketches in a human-like manner.

Progress in AI art over as little as the last five years has been so rapid that it has raised questions about what comes next for those working as artists today.

Language

Natural Language Processing (NLP) has arguably advanced more spectacularly than any other domain, with landmark progress over the last couple of years alone. A new generation of transformer-based language models is responsible for the dramatic improvements in quality. Transformers are now approaching human performance benchmarks in many key language tasks. They look set to disrupt a range of language use cases that have always been considered the exclusive preserve of highly intelligent humans. The progress made arguably renders the Turing Test obsolete. AI is increasingly capable of understanding and translating human speech and writing. It’s an incredible development. Language, after all, is regarded by academics like Noam Chomsky as the core feature of humanity, the one capability that distinguishes us from all other creatures on earth:

With some justice, [language] has often in the past been considered to be the core defining feature of modern humans, the source of human creativity, cultural enrichment, and complex social structure.

This capability is now under fierce attack, chiefly from Google. The first significant foray was the word2vec algorithm in 2013, which remains a popular starting point for NLP today. The technique learns vector representations (embeddings) of words, so that words used in similar contexts end up close together, and these embeddings became a building block for tools that generate text. The Encoder-Decoder architecture was the next step and a key building block in the development of Neural Machine Translation (NMT). Google NMT was the gold standard for translation over the last few years. However, these recurrent models have now been overtaken by the Transformer architecture, which is based on the concept of self-attention, “the method the Transformer uses to bake the “understanding” of other relevant words into the one we’re currently processing.” It’s what allows the Transformer to associate “it” with “the animal” in the sentence “The animal didn’t cross the street because it was too tired”. In so doing, transformers are able to generate much more realistic text.
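Scaled dot-product self-attention, the core operation inside a Transformer layer, fits in a few lines of NumPy. This is a single-head sketch with randomly initialised projection matrices (an assumption; a trained model learns them), so the attention weights it produces are meaningless, but the mechanics are the real ones: every token is compared with every other token, the scores are normalised with a softmax, and each output is a weighted mix of all tokens’ value vectors. In a trained model, the row for “it” might place much of its weight on “animal”.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over X of shape (n_tokens, d)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # relevance of every token to every other
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d = 5, 8                                  # e.g. 5 tokens with 8-dim embeddings
X = rng.normal(size=(n, d))                  # stand-in for token embeddings
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))  # hypothetical, untrained
out, weights = self_attention(X, Wq, Wk, Wv)
```

Real Transformers run many such heads in parallel and stack dozens of layers, but each head performs essentially this computation.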

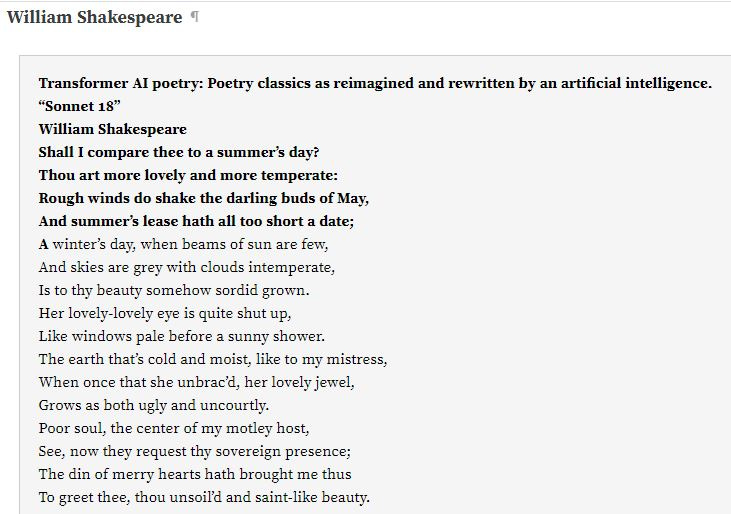

The two biggest language model developments over the last couple of years are BERT and GPT-3. Both are colossal Transformer models pre-trained on vast amounts of text. BERT is a pre-trained natural language processing model, trained without labelled data, which can be used for a range of use cases from improving search queries to enabling better content moderation. GPT-3, covered in more detail here, is a state-of-the-art language model that leapfrogs BERT. Access to its API is limited at present, but it promises to transform text generation. Early feedback suggests it can be difficult to distinguish GPT-3 output from text written by a human, as in this example of a generated continuation (unbolded) of the first few lines of Shakespeare’s Sonnet 18 (in bold).

Coding

Andrej Karpathy’s 2015 post on the unreasonable effectiveness of recurrent neural networks was a landmark at the time. In a particularly memorable section he presented samples of generated Linux kernel code. We have travelled a long way since then, from the RNNs and LSTMs Karpathy discussed to modern Transformer architectures. GPT-3 is built on top of this stack and is showing results remarkable enough to suggest it will profoundly shape the future of any human activity that involves generating text. GPT-3 is a multipurpose platform that can be steered to generate targeted output through prompting or fine-tuning. Since source code is itself text, GPT-3 will inevitably disrupt the long-term prospects of anyone writing code today. In fact it is already showing early potential to render human coders obsolete. The irony should not be lost that the very individuals developing the tools fuelling technological unemployment may themselves be consumed by the impact of their work.

General Intelligence

Surveying the advances on all these fronts provides a palpable sense that determined progress will continue without any real oversight. The flywheel of progressive improvement could trigger an intelligence explosion leading to the Singularity. At that point the high citadels of humanity will be overrun, with Artificial General Intelligence (AGI) assuming control. This will almost certainly not happen through deliberate policy. AGI is instead more likely to arrive by accident, through combinatorial advances in separate areas of underlying model architecture and poor application of the precautionary principle. The resulting loose collection of models bolted together may constitute a form of AGI by proxy. Work on this is proceeding apace all around the world, with Google DeepMind at the forefront of much of it. A recent job advert for a technical program manager with domain knowledge in “Understanding AGI Research” reveals how bullish Google is about its prospects:

Our special interdisciplinary team combines the best techniques from deep learning, reinforcement learning and systems neuroscience to build general-purpose learning algorithms. We have already made a number of high profile breakthroughs towards building artificial general intelligence, and we have all the ingredients in place to make further significant progress over the coming year!

The assault covers software and hardware, with parallel progress on both fronts. The two frequently combine, as in the case of transformers, which rely on specialised graphics processing hardware (GPUs) running in otherwise commodity PCs. The future offers the prospect of hardware that more closely mimics actual neurons.

There are skeptics like François Chollet who see the explosion as sigmoidal rather than exponential and feel AGI remains a faraway prospect. They frequently refer to thought experiments like John Searle’s Chinese Room to make the case that we are conflating intelligence with conscious understanding. The real point, however, is that we are likely to find it progressively more difficult to distinguish between humans and AI online. There is little in the way of regulation or control in this space. In fact we’re arguably already there with deep fakes and Twitter bots. It doesn’t take a lot to replace you with a digital version of you that fools most of the people most of the time. Covid is acting as an accelerant by forcing people to interact through a constructed social interface, the computer screen. This is opening up all sorts of scope for manipulation, with the latest computer vision, NLP, transformers and GANs conspiring to mess with your sense of reality. The virtual meetings of tomorrow may employ them all.

No AI at all was needed for a lo-fi lockdown hack in which the perpetrator ran prerecorded video of himself on Zoom for a week. He was replaced and no one even noticed. Irrespective of who is in the frame, the real you is less valuable than your abstraction. The digital twin built from data you’ve freely provided to the likes of Google and Facebook is more useful as a reliable model of your behaviour. Furthermore, the real you will increasingly sit below an API directed by an algorithm, as in the case of a remote drone operator or a forklift operator who never needs to leave their desk.

What’s next?

The rate of progress in machine learning and AI technologies over the last few years raises difficult questions about where we are heading. Unsupervised deep learning approaches have yielded impressive results. While we are still far from AGI, it is not through want of effort. The flywheel is in motion. There is a real sense of momentum and broad advance across many fronts, supported by huge compute resources and massive investment from both state actors and big tech companies. Respected authorities like Geoffrey Hinton are bullish:

I do believe deep learning is going to be able to do everything, but I do think there’s going to have to be quite a few conceptual breakthroughs. Particularly breakthroughs to do with how you get big vectors of neural activity to implement things like reason

As deep learning progressively encroaches upon human benchmarks, a more sinister encirclement is taking shape, driven forward by a tacit posthuman agenda. The territory was explored by Yuval Noah Harari in his book Homo Deus. Harari argues that the coming religion of Dataism, which elevates information flow above all else, will replace the post-Enlightenment humanist ideologies that dominated the 20th century. In extremis, Dataism sees the universe as pure data, and our manifest destiny as theosis: merging with the machines to create a new form of life that transcends mortal being. In this analysis humans are an “obsolete algorithm” and intelligence decouples from consciousness:

According to Dataism, human experiences are not sacred and Homo sapiens isn’t the apex of creation or a precursor of some future Homo deus. Humans are merely tools for creating the Internet-of-All-Things, which may eventually spread out from planet Earth to cover the whole galaxy and even the whole universe. This cosmic data-processing system would be like God. It will be everywhere and will control everything, and humans are destined to merge into it.

We can argue about the date and about whether the technological singularity will really happen, but the astonishing progress of the last decade cannot be dismissed out of hand. In fact the method being employed aligns with a standard strategy adopted by software architects to address legacy systems: the Strangler design pattern. The basic idea is to progressively encapsulate and seal off distinct legacy activities within a system so that each corresponding component can be entirely replaced with an updated solution:

Incrementally migrate a legacy system by gradually replacing specific pieces of functionality with new applications and services. As features from the legacy system are replaced, the new system eventually replaces all of the old system's features, strangling the old system and allowing you to decommission it.
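In code, the pattern usually takes the form of a facade that sits in front of the legacy system and routes each request either to the old implementation or, once a feature has been migrated, to its replacement. The class and method names below are hypothetical, chosen only to illustrate the idea in Python.

```python
from typing import Callable, Dict, Iterable

Handler = Callable[[str], str]

class StranglerFacade:
    """Hypothetical facade: routes a feature to its new handler if migrated, else to legacy."""

    def __init__(self, legacy: Handler) -> None:
        self.legacy = legacy
        self.migrated: Dict[str, Handler] = {}

    def migrate(self, feature: str, handler: Handler) -> None:
        # Seal off one piece of legacy functionality behind its replacement.
        self.migrated[feature] = handler

    def handle(self, feature: str, request: str) -> str:
        return self.migrated.get(feature, self.legacy)(request)

    def legacy_can_be_retired(self, features: Iterable[str]) -> bool:
        # The old system is fully strangled once every feature has a replacement.
        return all(f in self.migrated for f in features)


facade = StranglerFacade(legacy=lambda req: f"legacy:{req}")
print(facade.handle("billing", "invoice-42"))  # legacy:invoice-42
facade.migrate("billing", lambda req: f"new:{req}")
print(facade.handle("billing", "invoice-42"))  # new:invoice-42
print(facade.handle("search", "q"))            # legacy:q
print(facade.legacy_can_be_retired(["billing", "search"]))  # False
```

Callers never notice the switch: they keep talking to the facade while, one feature at a time, the old system is hollowed out until nothing routes to it.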

The concerted efforts being made on human creativity collectively constitute a Strangler pattern for our species. We are hollowing ourselves out from within through technology. OpenAI’s GPT-3 appears to agree.

We are helping to enable this future through our collective inaction, as in the case of Californians voting for Prop 22. One imagines that many of those who voted for it were driven by fear of losing employment. The spectre of technological unemployment has played an important role in the rise of populism and social division. It’s a trend that is bound to grow beyond 2020:

the near-term future of work to ask whether job losses induced by artificial intelligence will increase the appeal of populist politics ... a populist candidate who pits 'the people' (truck drivers, call centre operators, factory operatives) against 'the elite' (software developers, etc.) will be mining many of the US regional and education fault lines that were part of the 2016 presidential election.

What will happen to those displaced by the Strangler? What if humans become disillusioned and give up trying? Do the growing ranks of gig workers realise they are being slid under the API, the API that sits between those who do what the algorithms tell them and those who build the algorithms? As long as the capitalist system prevails, the odds are stacked against us, because we have been very effectively brainwashed into believing that there is no alternative. Without a change in mindset and politics, we are at existential risk, threatened both by advancing technology and by a “worldwide regression to bigoted ignorance and the gullibility or desperation that has enabled that ignorance such noxious success”. We have wandered far from Steve Jobs’ vision:

[it is] technology married with liberal arts, married with the humanities, that yields us the results that make our heart sing.

To halt the slide we need to find ways to balance technology and humanity: to celebrate the spirit of inquiry that led to the industrial revolution, not necessarily its outcome, and to really think through the impact of what we build and how it aids our cause. Stuart Russell’s book Human Compatible covers this territory well:

what if, instead of allowing machines to pursue their objectives we insist they pursue our objectives? Such a machine, if it could be designed, would be not just intelligent but also beneficial to humans. … This is probably what we should have done all along. The difficult part, of course, is that our objectives are in us (all eight billion of us) and not in the machines. … Inevitably these machines will be uncertain about our objectives - after all, we are uncertain about them ourselves - but it turns out this is a feature, not a bug.

It will require a complete rewiring of AI. Generation Z, the first fully fledged digital natives, will be at the forefront of this battle. They face formidable obstacles, but there is some hope and there are signs of resistance to the rise of the anti-human. People are realising that the abstract space AI inhabits today is vastly limited. Our qualia, the unique subjective human experience of consciousness, lie outside machines, which are capable only of intelligence. Our very corporeality stands in mute contrast to the virtual world:

Maybe one of the reasons these platforms of ours are completely out of control is that we’ve lost our connection to the physical manifestation of what it is that we’re creating. We’ve lost touch with the person on the other side. One of the reasons places like Facebook are struggling … is that it is truly impossible for anyone to, at scale, understand the physical impact of the code they’re pushing, how the bits get transferred into data, how data moves into the UI/UX, and how the small function that one person writes is transformed into a Like button that makes someone feel excluded or included, angry or elated, sad or thrilled.

The deep learning genie is out of the bottle now and we need to find ways to accommodate it. There is a role for technologies like deep learning working with rather than against human interests. We need to accept wabi-sabi, celebrate our imperfections and recognise that art made by people, blemishes, crackles, variation and all, really matters. We are at risk of losing our humanity to an abstraction and mistaking the map for the territory:

the more I live and work online, the more I realize that, what we gain in being able to communicate across time and space, en masse, the more we lose in context, in gesture, in understanding a single person in the sea of humanity. It’s this, combined with the global scope of outrage, that’s a dangerous form of abstraction today

Qualia experienced through authentic re-engagement with the natural world in all its manifestations are the key to reacquainting ourselves with the territory.