Technological Phase Shift

Unless you have been entirely offline this year, the chances are you already know quite a bit about ChatGPT, the conversational AI interface released as a free beta by OpenAI seven months ago. ChatGPT offers a deceptively straightforward proposition for users without requiring them to know anything about AI. It takes the form of a chatbot that builds on OpenAI’s GPT family of large language models (LLMs). GPT-3 was covered in this blog a couple of years ago and was then described as:

an automatic writing machine capable of providing a logical, convincing continuation of whatever it was fed as input.

This seemingly innocuous capability of highly accurate next-token prediction, which GPT-3 offered in the form of so-called continuations, has been improved upon by OpenAI in the period since. The result was the GPT-3.5 LLM used by ChatGPT, which detonated with huge global impact immediately after launch. It seems clear now in 2023 that a technological phase shift has taken place and nothing will be the same again. We are now in a new mode of living with computing technology.

Countless blog posts and tweets have been published showcasing ever more wondrous things people have made in moments using ChatGPT. It’s the most important paradigm shift in consumer computing technology since the advent of graphical user interfaces. The shockwaves have hit every single tech company, all of which are scrambling to develop an AI strategy.

There has also inevitably been a lot of criticism. Much of it has focussed on well-publicised examples of hallucinations, where absurd answers were generated in response to what seemed like relatively easily understood questions. The tendency of deep neural networks to hallucinate has been well known amongst researchers for several years, but encountering it in the wild as a non-specialist is very disconcerting and clearly a trust breaker. However, the sceptics miss a critical point, namely that the initial version of ChatGPT launched upon the world with GPT-3.5 under the hood was just a first iteration. This technology was always going to be subject to rapid improvement. It appears that the hallucination rate of around 15-20% exhibited by ChatGPT at launch can be reduced by using human input to score the responses and then feeding that information back to the model. Scaled up, this approach, called RLHF or reinforcement learning from human feedback, has generated significant improvements. Ilya Sutskever, OpenAI’s Chief Scientist and one of the creators of ChatGPT, has stated:

“I’m quite hopeful that by simply improving this subsequent reinforcement learning from the human feedback step, we can teach it to not hallucinate”

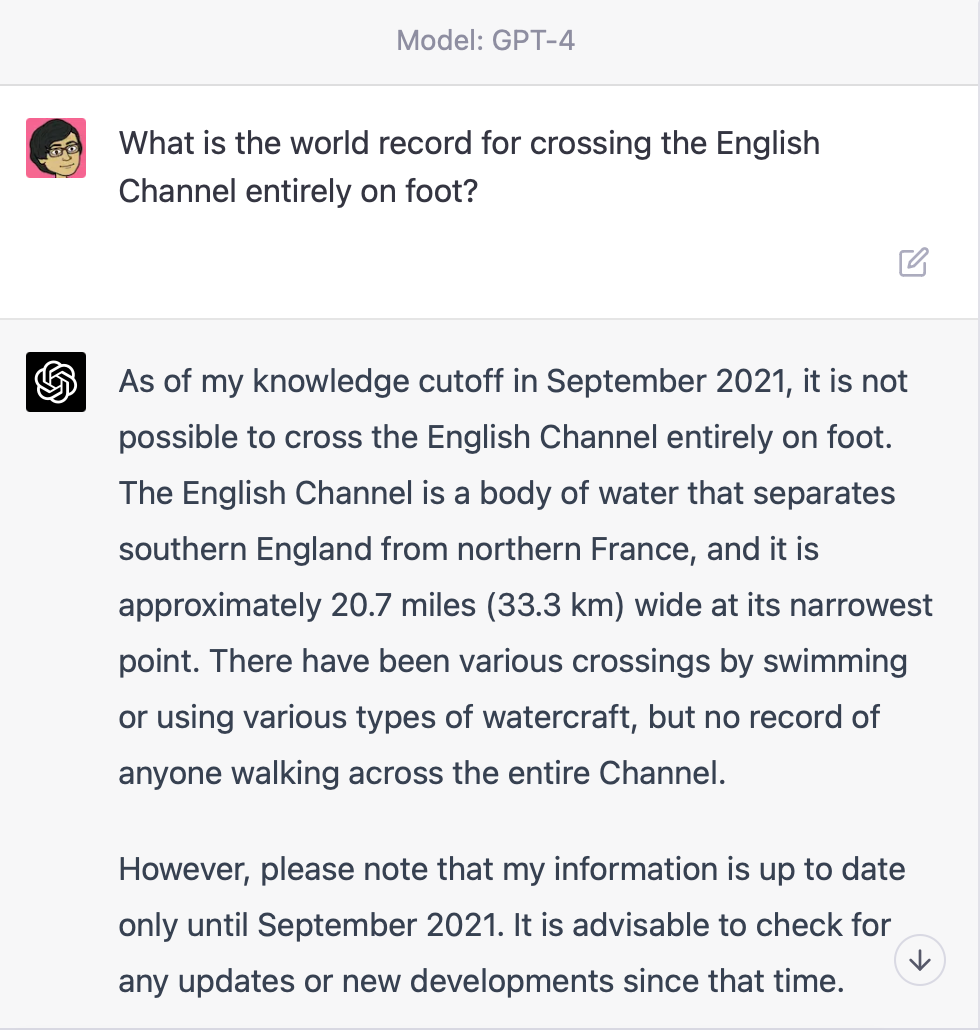

A few months after release, ChatGPT got an upgrade to OpenAI’s GPT-4 LLM which was subjected to even more RLHF during its development. The very same question used to reliably generate hallucinations in GPT-3.5 is addressed very differently by this new model:

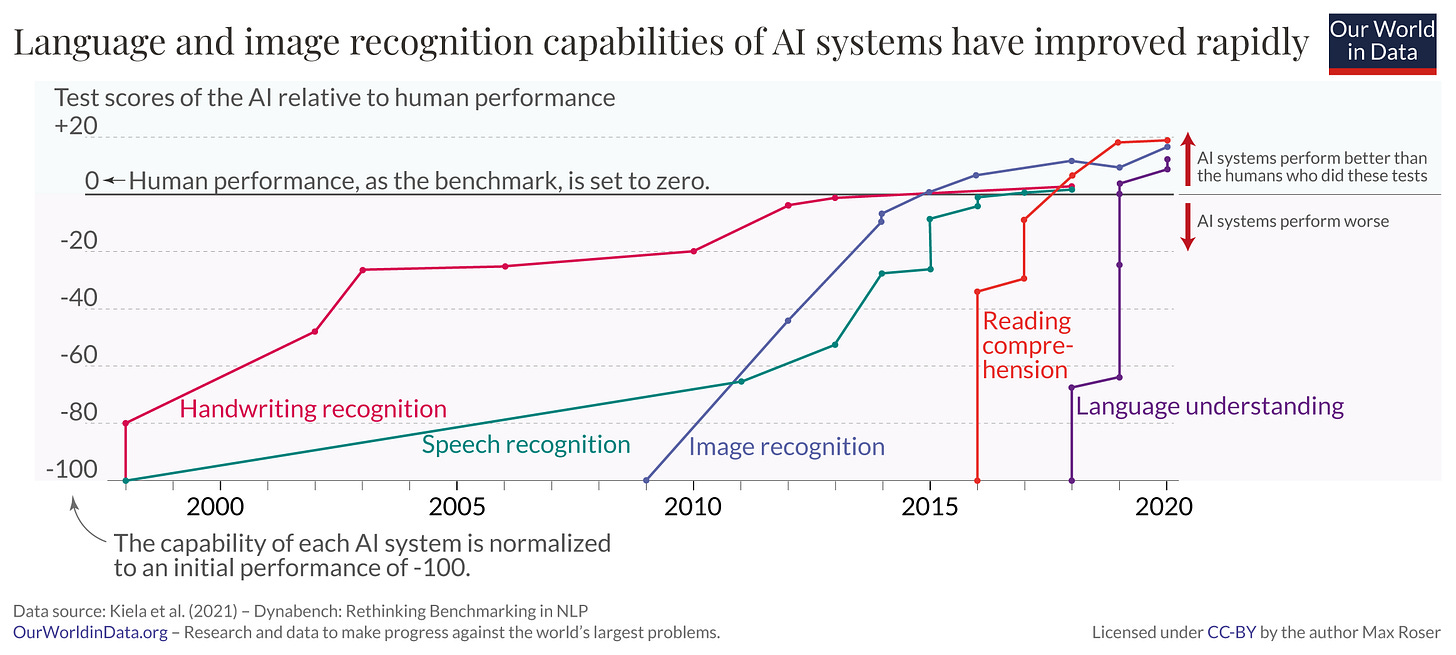

One must assume the wild mustang from launch will be tamed and become a progressively more useful horse for humanity in time. The more important realisation forced upon us by ChatGPT is that the high citadel of human exceptionalism around knowledge work, long considered impregnable, has been breached. While we debate the flaws and limitations of LLMs in the public forum, our usurpers are already inside, among us, making steady progress through the outer regions and working towards the core. There’s been a certain remorseless inevitability about the direction of travel, particularly over the last decade, which is dramatically captured in this graph showing the staggering improvements in AI capability from effectively nothing to greater than human performance in less than one generation:

ChatGPT and the GPT LLMs behind it have further catalysed speculation about the impact of AI technology on the world of work. Clearly there will be a significant impact on many jobs that involve text-based input. Quite how much, and what shape it will assume, isn’t precisely clear. As Noah Smith points out, nobody really knows. The impact of automation sits on a broad spectrum with mass unemployment at one end and improved productivity with no unemployment at the other.

The disruption of software development

Ironically, software development itself is likely to be first in line to be consumed by the very technology it has helped to conceive. One imagines scenes of shock and horror on the part of those implicated in birthing this technology: the doomed inventor Sawyer on the Moon screaming “I Made You” at his impassive deadly robot in Walter M. Miller Jr.’s sci-fi cautionary tale. LLMs will bring about a fundamental shift in software development practice akin to the switch from assembler to compiled languages, or indeed the later transition from compiled to interpreted languages like Python. The only constant here is that the line only ever goes up. The use of natural language to direct a computer to create code that makes change in the world constitutes a new form of computation. A VM addressed through natural language represents programming with words: the next computational platform.

Some arenas of software development are riper for disruption than others. Front-end development seems particularly vulnerable and, according to one correspondent, “will be replaced 100% by AI”. That’s an extreme view, but we can expect significant productivity improvements through selective automation within developer workflows. The development of test code also seems very vulnerable to automation, as Mike Fisher, ex-CTO at Etsy, recently pointed out in an article outlining a framework for thinking about the impact of generative AI (GAI) technology:

As impressive as the possible productivity increases are from code automation, I think the biggest impact to software development from GAI might be in automating testing.

The lionisation of the lazy programmer in tech is a powerful driving force. Copilot is its poster child and is already proving very popular, with little evidence of pushback. Quite the contrary. According to a recent GitHub developer survey, almost all US-based software developers are already engaging with Copilot and its peers:

AI is here and it’s being used at scale. 92% of U.S.-based developers are already using AI coding tools both in and outside of work.

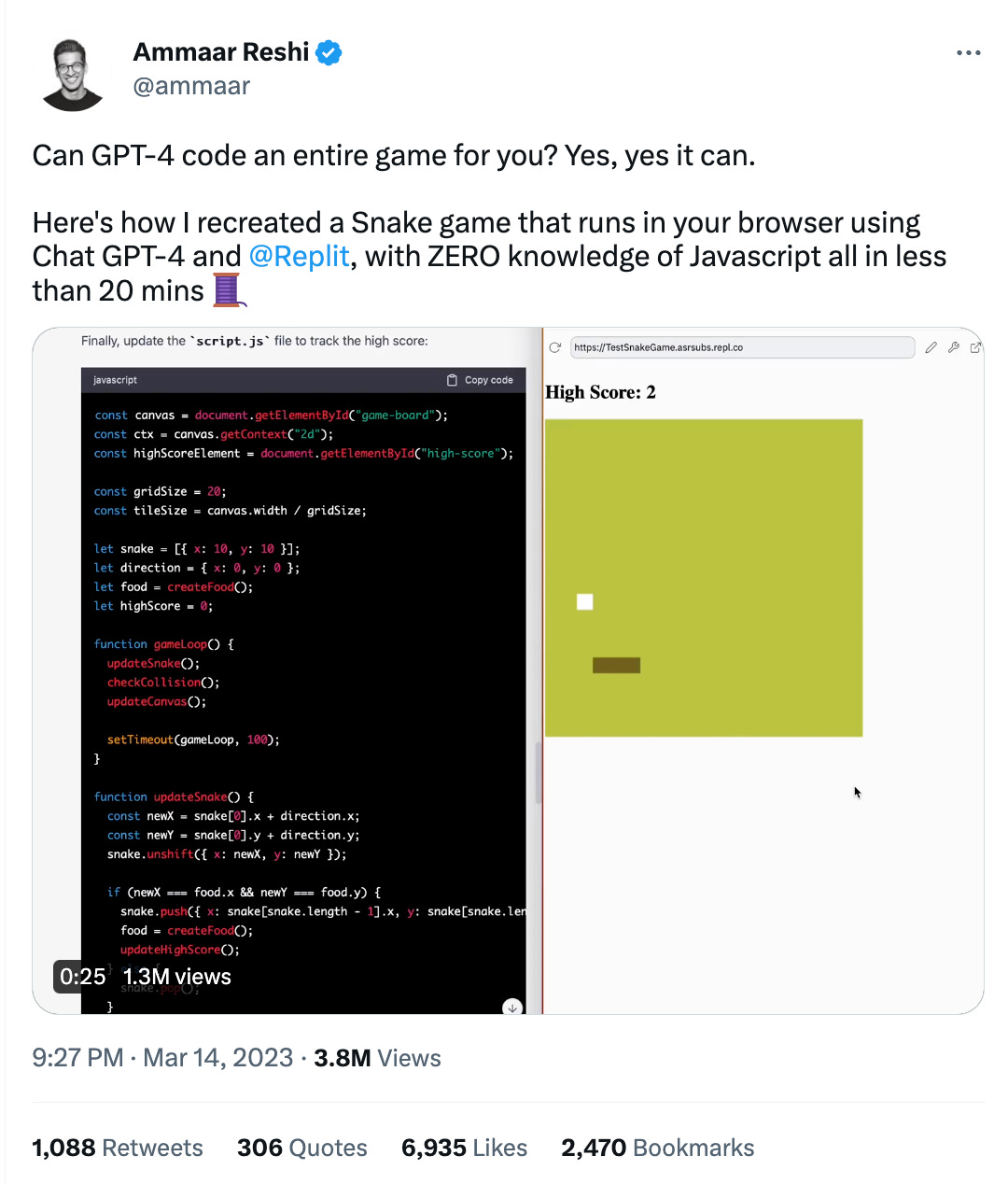

It’s not hard to understand the reasons why. ChatGPT can teach you to code as well as actually do the coding for you based on appropriate prompting. GPT-4 can code you a game in JavaScript:

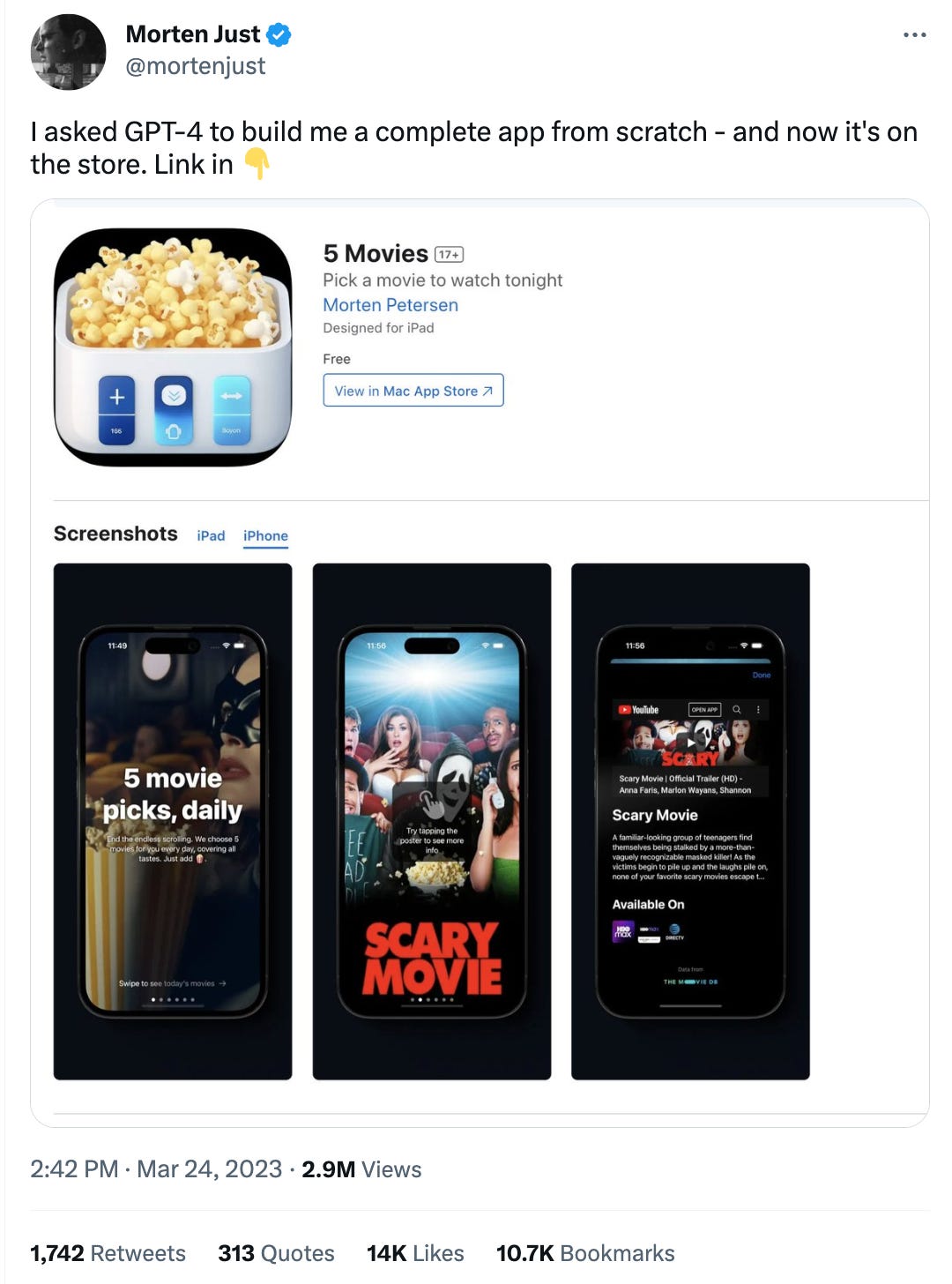

It can even code you a whole iPhone app:

Perhaps most remarkably of all, it can develop websites from hand-drawn sketches. Several developers have since combined GPT-4 with voice to go even further and create a form of Jarvis for coding able to build and deploy web apps.

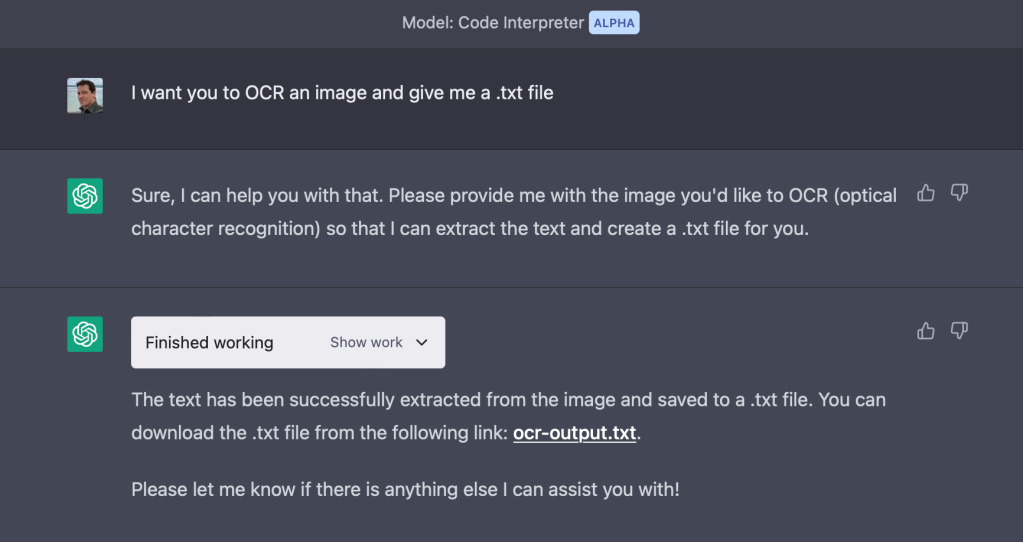

OpenAI’s ChatGPT code interpreter plugin, once mainstream, is likely to accelerate this kind of innovation. It appears to be remarkably powerful and includes a built-in ability to process images:

There has been relatively little mainstream coverage of these remarkable applications of AI in the world of software development. John Naughton wrote a stiff Guardian article that received a lot of feedback, much of it negative from people in tech likely irked by his unfortunate choice of comparison:

Programmers have always seemed like magicians: they can make an inanimate object do something useful. I once wrote that they must sometimes feel like Napoleon – who was able to order legions, at a stroke, to do his bidding. After all, computers – like troops – obey orders. But to become masters of their virtual universe, programmers had to possess arcane knowledge, and learn specialist languages to converse with their electronic servants. For most people, that was a pretty high threshold to cross. ChatGPT and its ilk have just lowered it.

This related post that Naughton links to describes the dawn of LLMs as Software’s Gutenberg moment aimed squarely at the centre of software development:

The current generation of AI models are a missile aimed, however unintentionally, directly at software production itself. Sure, chat AIs can perform swimmingly at producing undergraduate essays, or spinning up marketing materials and blog posts (like we need more of either), but such technologies are terrific to the point of dark magic at producing, debugging, and accelerating software production quickly and almost costlessly.

According to the authors, the sweet spot for LLM-based AI technology is jobs that are both predictable and premised on precise grammatical rules. Examples include tax accountants, call centre staff, contract writers and, of course, software engineers:

The difference between these jobs is the corresponding pay packages. Software engineers today command large salaries. The proximate outcome is a predicted collapse in the cost of software development as LLMs start taking on more of the base production work:

Matt Welsh, the founder of fixie.ai, reaches the same conclusion in a provocative interview with Danny Fortson on the irresistible rise of what he terms “the 12 cent engineer”.

Personal adventures

My own adventures using GPT-4 suggest it can certainly support development and improve productivity if used appropriately. The three areas where I found it most useful were discovery, boilerplate for tests and code review.

1. Discovery

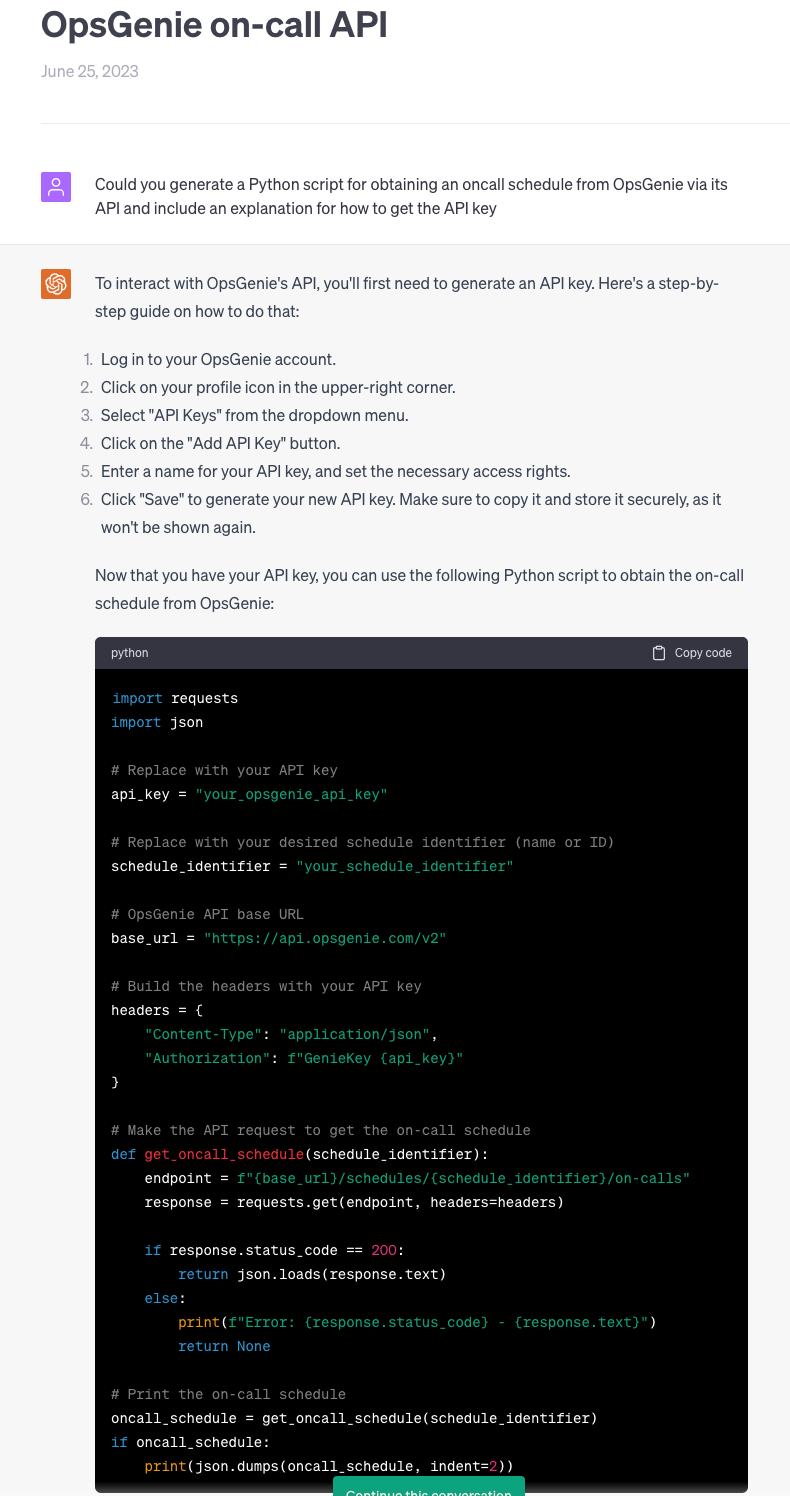

I was recently conducting an evaluation of OpsGenie and wanted to understand how to access an on-call schedule using the official API. I used ChatGPT to generate me the corresponding Python code per the conversation here. That code worked once I obtained the API key. Using ChatGPT here saved me time that would have been spent fiddling about with requests and reading documentation.
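For readers who have not used the API, a lookup of this kind can be sketched in a few lines. This is not the code ChatGPT produced in the conversation; it is a minimal illustration that assumes the Opsgenie v2 "who is on call" endpoint, a `GenieKey` authorisation header and the `flat` query option, and it uses only the standard library. The helper names `build_on_call_request` and `fetch_on_call` are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumed base URL for the Opsgenie REST API (v2).
OPSGENIE_API = "https://api.opsgenie.com/v2"


def build_on_call_request(schedule_name, api_key):
    """Build (but do not send) the GET request for a schedule's on-call list."""
    # Look the schedule up by name and ask for a flat list of recipients.
    query = urllib.parse.urlencode(
        {"scheduleIdentifierType": "name", "flat": "true"}
    )
    path = urllib.parse.quote(schedule_name, safe="")
    url = f"{OPSGENIE_API}/schedules/{path}/on-calls?{query}"
    return urllib.request.Request(
        url, headers={"Authorization": f"GenieKey {api_key}"}
    )


def fetch_on_call(schedule_name, api_key):
    """Send the request and return the on-call recipients (needs a real key)."""
    request = build_on_call_request(schedule_name, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    # Assumption: with flat=true the names are listed under data.onCallRecipients.
    return payload["data"].get("onCallRecipients", [])
```

Calling `fetch_on_call("My Schedule", api_key)` with a valid key should return whoever is currently on call; the schedule name here is a placeholder.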

2. Boilerplate test code

On another recent project, I built an experimental open source tool called orgcharts to generate organisation diagrams from YAML using networkx. After a little development I wanted to start building out the corresponding pytest test code. I asked ChatGPT to generate a starting template for me by passing in the code developed to that point. It duly delivered per the conversation here. This use case felt particularly useful and beyond what one can do with tools like Copilot. There ought to be less opportunity for GPT-4 to hallucinate here as you pass in specific instructions and code.
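To give a flavour of what such a starting template looks like, here is a small sketch. It is not taken from orgcharts or from the ChatGPT conversation: `build_edges` is a hypothetical stand-in for the tool's graph-building step, and a plain nested dict stands in for the parsed YAML so the example runs without networkx or a YAML library. The `test_*` functions use bare asserts, which pytest collects as-is.

```python
def build_edges(org):
    """Flatten a nested {"name": ..., "reports": [...]} mapping into
    (manager, report) pairs, depth first. Hypothetical helper."""
    edges = []
    for report in org.get("reports", []):
        edges.append((org["name"], report["name"]))
        edges.extend(build_edges(report))
    return edges


# pytest discovers functions named test_* and runs their assertions.
def test_single_person_has_no_edges():
    assert build_edges({"name": "Ada"}) == []


def test_nested_reports_are_flattened():
    org = {
        "name": "Ada",
        "reports": [
            {"name": "Grace", "reports": [{"name": "Linus"}]},
            {"name": "Alan"},
        ],
    }
    assert build_edges(org) == [
        ("Ada", "Grace"),
        ("Grace", "Linus"),
        ("Ada", "Alan"),
    ]
```

Saved in a file such as `test_orgchart_sketch.py` (a made-up name), this would run with a plain `pytest` invocation. The value of asking an LLM for this kind of scaffold is less the assertions themselves than having the structure in place to extend.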

3. Code review

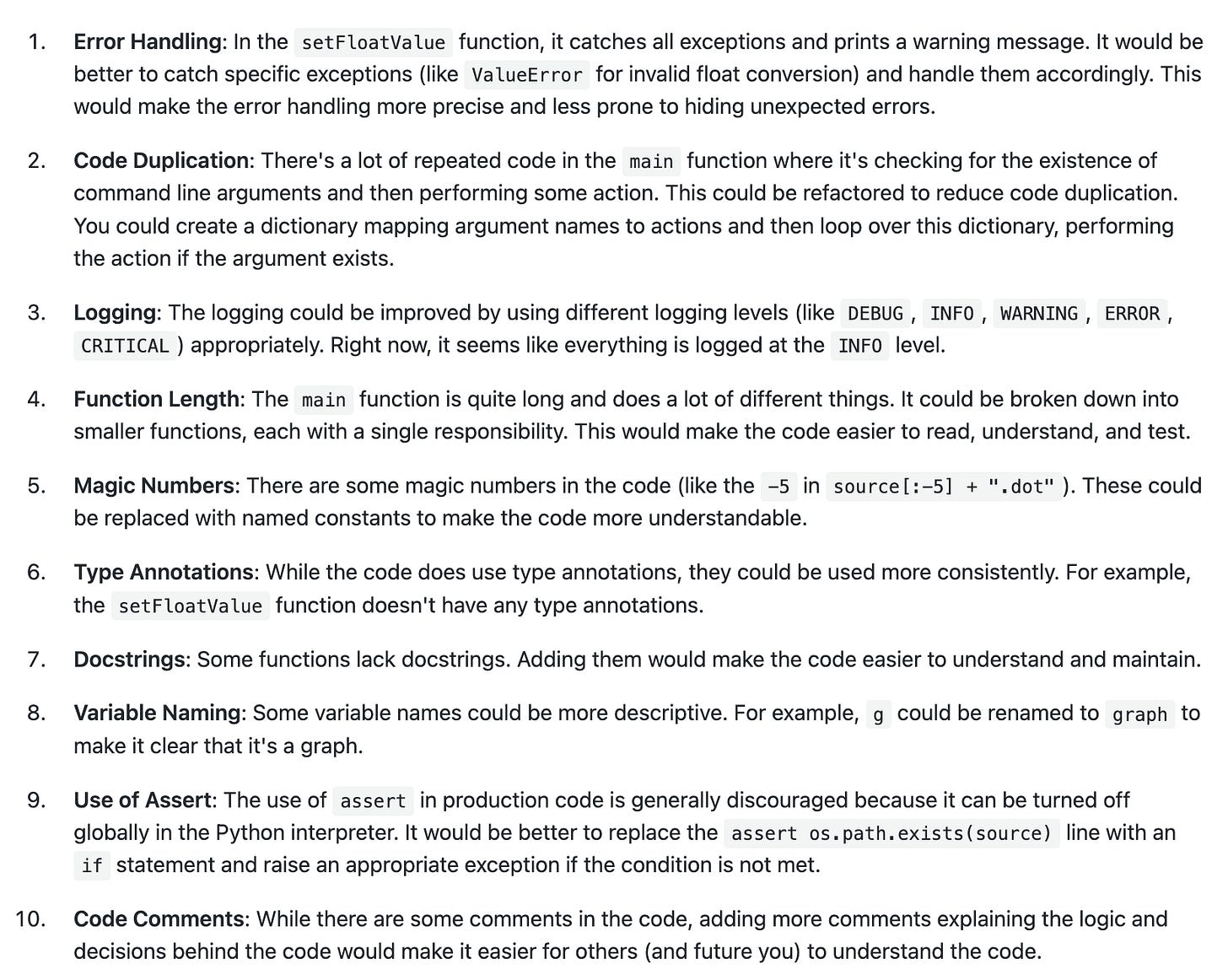

After building out my orgcharts tool without any external input, I wanted a code review undertaken. I used the ChatGPT Web Requests plugin and pointed it at the main Python file. The response was a genuine surprise. An acerbic reviewer emerged with a distinct tone of voice exhibiting doctrinaire tendencies. The suggestions provided, however, were excellent starting points. ChatGPT in this mode could be very useful for helping to improve your code as long as it’s open source:

Whither software development?

LLM technology is bound to impact software development. The job will continue to exist but there will be some change in what the role involves, with AI performing the role of a smart assistant. A Human-Assisted AI (HAAI) paradigm is likely to dominate as more developer tooling integrates AI support. As the GitHub survey data reveals, the path to the future has already crept uneasily towards a Brautigan vision of humans “all watched over by machines of loving grace”. The result is a centaur: half human, half machine. AI can provide a glimpse into a world where we are each turned into one of the Avengers, and who doesn’t want that? Of course people will want the magic sauce if it makes them more productive than the competition. Simon Willison has written persuasively about this topic. He calls it AI-enhanced development.

This IEEE hosted post, however, offers a far more sceptical response, warning that AI-generated code is ultimately just a confabulation like everything else spouted by LLMs.

Large language models create a conundrum for the future of programming. While it’s easy enough to create a fragment of code to tackle a straightforward task, the development of robust software for complex applications is a tricky art, one that requires significant training and experience. Even as the application of large language models for programming deservedly continues to grow, we can’t forget the dangers of its ill-considered use.

Forrest Brazeal is also not overwhelmed by the prospect of AI coding, suggesting that all AI is good for is boilerplate-related tasks and not the things that really slow down developers, which are mostly related to workplace pathology:

As others have observed, LLMs definitely have a role to play in the future of software development. Today their impact is largely being felt through the GPT family of models, with many developers engaging through ChatGPT. LLMs will increasingly be integrated into developer tooling and provide support at different points throughout the workflow. Their introduction is unlikely to result in mass technological unemployment amongst the ranks of software engineers, but the nature of the work will change. Those developers who don’t embrace that change and learn to engage with all the new tools are likely to get left behind over time.

The next stage is therefore the arrival of a programmer’s assistant to assume the role of interpreter and executor of human developer commands. It is a return to a more ancient time in a sense, a world of magic where speech and thought are converted to code that will inevitably be executed too at some point soon. Such wonders would have been indistinguishable from spells and incantations for our forebears, and maybe will be for many alive today who see them. In a sense AI is creating a new form of Daimonic Reality that exists outside our normal understanding of what is natural, in the realm of the supernatural. Spells can be negative too; the namshub from Snow Crash was fatal. It’s very likely that the output from AI coding assistants will wreak havoc in the world if blindly adopted without scrutiny. It pays to be sceptical, or at least to trust but verify. In this world, it would be wise to remember the cautionary tale of the Sorcerer’s Apprentice, here in the guise of a coding assistant. The Source-rer’s Apprentice, if you like, introducing chaos into code through means we are as yet only dimly aware of:

The worst A.I. risks are the ones we can’t anticipate. And the more time I spend with A.I. systems like GPT-4, the less I’m convinced that we know half of what’s coming.